Over the last thirty years, the practice of benchmarking capital projects and statistically analyzing the results to infer trends and best practices has become a standard for the evaluation of capital project performance. Over that period, despite the emergence of many benchmarking-derived best practices intended to improve project performance, major capital project outcomes remain stubbornly poor.

The Institute argues here that benchmarking methodology has limited power to infer the most relevant root causes of project underperformance, for two reasons. First, Era 1 and Era 2 project thinking [1] dominates the conventional wisdom used to hypothesize root causes of project underperformance. The true underlying root causes can instead be arrived at through the deeper Operations Science analysis offered by Project Production Management (PPM).

The second reason is that correlation is not causation: inferring trends and best practices through statistical correlation may well identify practices common to “successful” projects. However, it does not guarantee that performing those practices will assure project success. In other words, benchmarking can help to identify practices which may well be necessary, but which are by no means sufficient for successful project performance.

We compare benchmarking with Project Production Management, which in contrast is a technical framework that applies Operations Science theory to understand and control project execution in an entirely predictable manner.

Keywords: Capital Project; Benchmarking; Statistical Test; Correlation; Three Eras

The practice of benchmarking projects, and performing careful statistical analysis to understand the factors behind project success and failure, has become a standard method for assessing project performance over the last thirty years. Institutions such as Independent Project Analysis Inc. have become respected reference institutions for compiling data and statistics on capital project performance across a variety of industries. An extensive academic and industry practice literature has emerged around the area – a representative but by no means comprehensive set of references is [1] [2] [3] [4] [5] [6] [7] [8]. The book by Merrow [9] is probably one of the foremost references in the area.

Thirty to forty years is a substantial period of time, over which identified best practices should have produced a substantive improvement in capital project performance. Yet it is universally acknowledged in recently published literature, most notably [10], that capital project outcomes remain poor. There might be a number of reasons for this persistent underperformance. Perhaps best methods and practices have not been implemented, or have been implemented incorrectly, or have not had sufficient time for improved results to become apparent in the data. Perhaps the identified ways to improve are not sufficiently specified, or are not feasible to implement as described. Perhaps projects have increased in scope and complexity, outstripping the potential of best practices to improve performance. Or perhaps the proposed methods and practices do not address the underlying root causes of project underperformance.

The Institute’s position, described here, is that while benchmarking has valuable uses, it has not been effective in identifying routes to significantly improve project performance. This is not to minimize or disparage the value of benchmarking – almost certainly a number of emergent practices improve project performance at the margin. However, the critical point is that the statistical analysis of project performance has not been successful in identifying root causes of poor project performance. Since the correct root causes have not been identified, benchmarking has not provided changes in project execution that can reliably and predictably improve project delivery.

It is the Institute’s position that one reason is that Era 1 and Era 2 project management thinking [11] dominates the thinking behind hypothesizing root causes for project underperformance. We believe that an Era 3 analysis of projects using the PPM technical framework identifies not only root causes, but also verifiable steps for improvements. We conclude by noting that benchmarking is usefully complemented by the PPM technical framework, where the underlying operations science provides the necessary theory to both understand and predict how to improve project execution and delivery.

The benchmarking literature describes a number of directions for project performance improvement. They are diverse in nature, but they fall broadly into two categories of Era 2 project management thinking. One class of recommendations is concerned with the systems and processes of project management governance. The other is concerned with improving human collaboration in teams. We have not come across any recommendations in the benchmarking literature that address the “how” of work execution – understanding the physics of the interconnected sequences of work activities and how to optimize the execution of those work sequences.

An influential example is the work of Merrow et al. [9] [10] [12]. In [10], there is one overarching theme and three subordinate conclusions. The overarching theme is an observation of the inability of project owners to “effectively integrate all the functions needed to produce projects.” This is a broad statement – in one sense it might be interpreted as an argument for an Operations Science approach to synchronize all the different activities of the diverse stakeholders in a project. However, an examination of the literature reveals that no such precise idea is in mind. Merrow in [10] lists three subordinate directions to achieve better integration: more attention to Front-End Loading (FEL); continuity of project leadership; and a less aggressive approach to scheduling than is currently believed to be the practice. We describe each of these in the subsequent paragraphs, outlining the limitations of each.

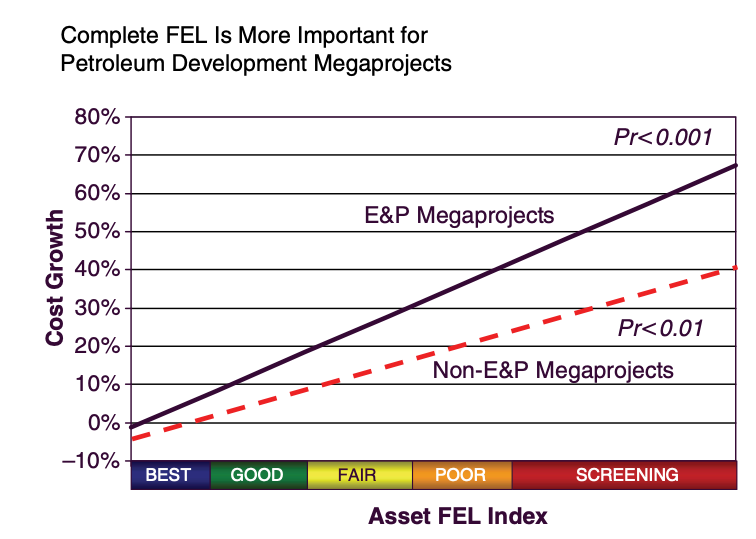

The first proposed area to improve “integration” is to have more complete Front-End Loading. In other words, project scopes need to be more complete – the case being made here is that insufficient front-end work causes cost and schedule overruns, because project scopes inevitably have to be finalized over the course of project execution. The argument is that project governance mechanisms put pressure on project teams to get to execution, so that the teams do not sufficiently define project scopes. Figure 1 shows the evidence presented in [11] to support this argument, illustrating the correlation between completeness of front-end work and project cost. Completeness of front-end work is measured on a subjective numerical scale and is correlated with project costs across a population of capital projects. It is clear that less complete front-end loading is strongly correlated with project cost overruns. It does not follow, however, that the converse is true – that more complete FEL will in itself assure that cost overruns are eliminated. We would point out that an alternative would be to design the production system to handle the variability intrinsic to project scope as projects evolve, rather than assume that it is even feasible to lock project scope upfront.

Figure 1: Taken from Merrow [11] showing the correlation between completeness of FEL and project cost

A second proposed root cause is a lack of continuity in project leadership. This factor is again concerned with the human element in forming project teams. Again, authors have found strong correlations between capital project performance and continuity of project leadership. It is certainly reasonable to assume that the additional work of change management, as skills and personnel turn over in a project, will cause cost and schedule overruns, especially if not properly accounted for. It does not follow, however, that assuring continuity of leadership will assure superior project performance.

A final proposed root cause is the “drive for speed” – that proposed schedules for capital projects are too aggressive, and inevitably set up the project team for failure. The Institute has perhaps the most disagreement with this proposal, based on the reasoning given for a “realistic schedule” [11, p. 41]. The argument here is that if a proposed schedule is significantly more aggressive than the industry average, derived of course from benchmarking a population, that is almost certainly a predictor of cost and schedule overruns. Indeed, it might well be, if the project is based on Era 1 and Era 2 project schedule thinking.

The Institute believes there are flaws in this reasoning. First, there is no scientific basis provided for whether a schedule is reasonable or not, except to measure whether others have achieved comparable results in the past. To paraphrase an Institute Industry Council member from a large supermajor oil & gas operator, “we are probably all very incompetent, and it’s not satisfying to say that we are the best of a bad bunch.” As the Institute has shown in other case examples, an Operations Science analysis of conventional scheduling and project work breakdown structures reveals ample use of time and inventory buffers that are inefficiently placed to manage the variability that the project encounters. In other words, there have been cases where schedules can be dramatically reduced, without jeopardizing project performance, through the more considered scientific approach to controlling project execution via PPM.

The limitation of hypothesizing causes of project underperformance through statistical correlation is that it does not prove any causal relationship between the proposed causes and project underperformance. So while statistical analysis may reveal necessary conditions for satisfactory project execution, there is no assurance that they are sufficient conditions. One might try to address all these factors, and still have project underperformance. Indeed, this is what the Institute believes has happened – in the absence of a deeper causal analysis of what causes project underperformance, industries have been unsuccessful in achieving improvements in project performance, despite great efforts.
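The distinction between necessary and sufficient conditions can be made concrete with a small simulation. The sketch below is purely illustrative and uses invented numbers, not benchmarking data: it assumes that half of all projects adopt a benchmarked practice, that an unmeasured factor is present in 30% of projects independently of that practice, and that a project succeeds only when both are present. The function names are hypothetical.

```python
import random


def simulate_projects(n_projects=10_000, seed=0):
    """Return a list of (used_practice, succeeded) pairs.

    Hypothetical assumptions, chosen only for illustration:
    - half of all projects adopt the benchmarked practice;
    - an unmeasured factor (say, well-controlled execution) is present
      in 30% of projects, independently of the practice;
    - a project succeeds only when BOTH are present, so the practice
      is necessary but not sufficient.
    """
    rng = random.Random(seed)
    projects = []
    for _ in range(n_projects):
        used_practice = rng.random() < 0.5
        hidden_factor = rng.random() < 0.3
        projects.append((used_practice, used_practice and hidden_factor))
    return projects


def success_rate(projects, used_practice):
    """Share of successful projects among those with the given practice flag."""
    outcomes = [ok for flag, ok in projects if flag == used_practice]
    return sum(outcomes) / len(outcomes)


def practice_share_among_successes(projects):
    """Share of successful projects that used the practice."""
    flags = [flag for flag, ok in projects if ok]
    return sum(flags) / len(flags)


projects = simulate_projects()
print("P(practice | success):   ", practice_share_among_successes(projects))
print("P(success | practice):   ", round(success_rate(projects, True), 3))
print("P(success | no practice):", round(success_rate(projects, False), 3))
```

Under these assumptions every successful project used the practice, so a benchmarking study would find it strongly associated with success. Yet most projects that adopt the practice still fail, because the unmeasured factor is what separates them.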

A common theme in most if not all proposed root causes of project underperformance is that they concern one form or another of variability encountered during project execution. Understanding that variability is at the root of many proposed causes of project underperformance motivates the Institute’s argument that Project Production Management, with its Operations Science underpinnings, provides deeper insight into fixing project underperformance.

As argued in [1], Era 1 and Era 2 project thinking doesn’t contemplate variability, or at least not in a systematic manner. The best that it does is the ad hoc allocation of contingencies in project schedule and budget to account for the different sources of variability. Operations Science [13] offers a deeper understanding of how to most effectively buffer variability and to control work activities as they are affected by variability. The supporting evidence for this argument is the set of case examples described in previous work by numerous practitioners at the PPI Symposium and in previous editions of the Journal.
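One way to see why the placement of buffers matters is a well-known statistical effect, sometimes called variability pooling. The sketch below is a minimal illustration under stated assumptions and is not drawn from the Institute’s case examples or from the PPM framework itself: it assumes ten tasks executed strictly in sequence, with independent durations drawn from the same lognormal distribution. It compares padding every task to its own 90th percentile with sizing a single buffer from the 90th percentile of the whole chain. All names and parameters are hypothetical.

```python
import math
import random


def percentile(values, q):
    """Nearest-rank percentile of values for 0 < q <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def compare_buffers(n_tasks=10, n_runs=20_000, q=90, seed=1):
    """Compare per-task padding with one pooled buffer for a task chain.

    Hypothetical assumptions: tasks run strictly in sequence and their
    durations are independent draws from the same lognormal distribution.
    """
    rng = random.Random(seed)
    # Each row is one simulated execution of the whole chain.
    runs = [[rng.lognormvariate(0.0, 0.5) for _ in range(n_tasks)]
            for _ in range(n_runs)]

    # Option A: pad every task to its own q-th percentile, then add up.
    single_task_samples = [row[0] for row in runs]
    padded_schedule = n_tasks * percentile(single_task_samples, q)

    # Option B: size one buffer from the q-th percentile of the chain total.
    pooled_schedule = percentile([sum(row) for row in runs], q)
    return padded_schedule, pooled_schedule


padded, pooled = compare_buffers()
print(f"per-task padding: {padded:.1f}  pooled buffer: {pooled:.1f}")
```

Under these assumptions the pooled schedule is shorter than the sum of the individually padded tasks, because independent overruns and underruns partly cancel across the chain. Padding each task separately therefore allocates more time than a 90% completion target for the chain as a whole requires. Real projects violate the independence assumption to varying degrees, so the size of the effect here should not be read as a prediction.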

We have described the limitations of benchmarking in identifying root causes of capital project underperformance. A primary reason for these limitations is the Era 1 and Era 2 perspective of conventional project management, which constrains the universe of hypotheses that are considered as root causes of project underperformance. A second reason is that benchmarking intrinsically reveals correlation but does not prove causation. Project Production Management offers a deeper understanding of how to execute and control work through Operations Science. It is therefore a route to achieving superior project performance.